HeavyJobs

Revision as of 14:22, 15 April 2008 by Ward Cunningham (→Bugs and Todos: worker marking)

What (summary)

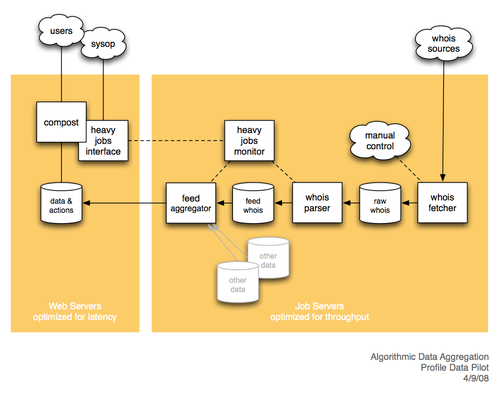

Manage long-running jobs on available compute resources (servers), using database tables to keep track of work and inter-process communication to keep track of workers.
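A minimal sketch of the "db tables keep track of work" idea, using Python's built-in sqlite3. The table names, column names, and state values are assumptions made for illustration, not the project's actual schema, and the inter-process communication side is left out.

```python
import sqlite3

# Hypothetical schema: names and states are assumptions, not the real tables.
SCHEMA = """
CREATE TABLE heavy_jobs (
    id   INTEGER PRIMARY KEY,
    name TEXT NOT NULL
);
CREATE TABLE chunks (
    id        INTEGER PRIMARY KEY,
    job_id    INTEGER NOT NULL REFERENCES heavy_jobs(id),
    state     TEXT NOT NULL DEFAULT 'pending',  -- pending | running | done
    worker_id TEXT                               -- set when a worker claims the chunk
);
"""


def open_db(path=":memory:"):
    """Open the database and create the two bookkeeping tables."""
    conn = sqlite3.connect(path)
    conn.executescript(SCHEMA)
    return conn


def add_job(conn, name, chunk_count):
    """Create a job and split it into chunk_count pending chunks."""
    cur = conn.execute("INSERT INTO heavy_jobs (name) VALUES (?)", (name,))
    job_id = cur.lastrowid
    conn.executemany(
        "INSERT INTO chunks (job_id) VALUES (?)", [(job_id,)] * chunk_count
    )
    conn.commit()
    return job_id


def claim_chunk(conn, worker_id):
    """Claim the oldest pending chunk for worker_id; return its id or None."""
    row = conn.execute(
        "SELECT id FROM chunks WHERE state = 'pending' ORDER BY id LIMIT 1"
    ).fetchone()
    if row is None:
        return None
    # Re-checking the state in WHERE means a chunk that another worker
    # claimed in the meantime is not claimed twice.
    cur = conn.execute(
        "UPDATE chunks SET state = 'running', worker_id = ? "
        "WHERE id = ? AND state = 'pending'",
        (worker_id, row[0]),
    )
    conn.commit()
    return row[0] if cur.rowcount == 1 else None


def finish_chunk(conn, chunk_id):
    """Mark a chunk as done."""
    conn.execute("UPDATE chunks SET state = 'done' WHERE id = ?", (chunk_id,))
    conn.commit()
```

A worker loop would call `claim_chunk` until it returns `None`, do the work, and call `finish_chunk` for each chunk it completes.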

Why this is important

We will use this infrastructure to manage our algorithmic data collection. This is a strategic direction for the company.

DoneDone

We will be satisfied with this infrastructure when:

- we can launch, balance, and diagnose all steps of our pilot whois refresh path:

  - fetchers

  - parsers

  - aggregators

- we have startup scripts that will resume proper job processing after a machine reboot

- we can monitor the overall health of all heavy job processing with Zabbix, including alerts to system administrators

Bugs and Todos

(prioritized high, medium and low for this week.)

- A worker should mark a chunk with its worker id (an array of ids when restarted)

  - this lets us draw one line per worker on the throughput graph

- Workers should do partially completed chunks before starting new chunks.

- A worker should terminate when a manager has no more work to do

  - unless there is a stream of new chunks from another job

  - or do we want an autostart mechanism? (getting too complex)

- Integrate the two controllers (how to be determined)

- show chunk id in heavy_jobs/show

- show ps of workers in heavy_worker/status

- kill or restart hung workers

- move fetchers into framework, have it create parsing chunks

- Tally throughput, good records, etc.

- keep a log of automatic actions

- Should HeavyJob be the source for actions? Need better requirements here.

- Finer-grained progress

- Zabbix script to count busy and idle workers. (Or count something else interesting.)
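The first three worker todos above (mark chunks with worker ids, finish partial chunks first, terminate when out of work) can be sketched in a few lines of Python. This is only an illustration under assumptions: `Chunk`, `next_chunk`, and `run_worker` are hypothetical names, the chunks live in a plain list rather than in the db, and concurrency between workers is ignored.

```python
from dataclasses import dataclass, field


@dataclass
class Chunk:
    id: int
    total: int      # records in the chunk
    done: int = 0   # records processed so far
    # Every worker that has touched the chunk, in order; more than one
    # entry means the chunk was picked up again after a restart.
    worker_ids: list = field(default_factory=list)

    @property
    def state(self):
        if self.done >= self.total:
            return "done"
        return "partial" if self.done > 0 else "new"


def next_chunk(chunks):
    """Prefer partially completed chunks, then new ones; None when none are left."""
    for wanted in ("partial", "new"):
        for chunk in chunks:
            if chunk.state == wanted:
                return chunk
    return None


def run_worker(worker_id, chunks):
    """Process chunks until none remain, then return so the worker can exit."""
    handled = []
    while (chunk := next_chunk(chunks)) is not None:
        chunk.worker_ids.append(worker_id)  # mark the chunk with this worker
        chunk.done = chunk.total            # stand-in for the real work
        handled.append(chunk.id)
    return handled
```

For example, given one new chunk and one chunk left half finished by an earlier worker, `run_worker` handles the half-finished chunk first and its `worker_ids` ends up holding both workers. Recording ids per chunk is what would allow a per-worker line on the throughput graph.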